At its annual Build developer conference in Seattle, Microsoft is making a series of announcements. Many of them involve copilots, AI-powered assistants for all kinds of applications. The headline grabber: Windows Copilot, the first OS-level AI aid. Microsoft intends to run AI on hardware both in the cloud and, where possible, locally. But the Redmond tech giant is not planning to build copilots only for its own software suite. The company believes outside developers should add AI bots as well, and that requires a framework.

Hear the word “copilot” and you quickly think of GitHub Copilot, which emerged two years ago. It is an AI assistant that helps programmers write code. Not long ago, GitHub unveiled a significant expansion of the Copilot offering: Copilot X. Still, Redmond wants us all to think more broadly about the concept. Microsoft defines a copilot as an “application that uses modern AI and large language models to assist you with a complex cognitive task.”

The GPT-4 technology that Microsoft can deploy thanks to its investments in OpenAI is now turning up everywhere in its software suite. After equipping all Microsoft 365 applications with the AI tool, the tech giant is turning to its flagship: Windows itself. During Build, it also announced new Copilot functionality in Microsoft Edge.

Rumors about an AI-focused Windows 12 have been circulating for ages. However, Microsoft is not waiting for its launch (presumably in 2024) to offer OS-level AI. Building on OpenAI technology, it is launching what it calls the first PC platform to offer “centralized AI assistance.”

Windows Copilot: from simple to complex

Copilot for Windows 11 serves two purposes: simple everyday actions and more complex tasks. It will be introduced in June. The tool is invoked via a central button on the taskbar, an indication of the importance Microsoft places on Copilot. Once invoked, Copilot remains available as a sidebar alongside all apps, programs and windows. With Copilot’s help, familiar Windows actions such as copy and paste can produce human-like summaries, additions and corrections. Windows Copilot also supports plugins from Bing Chat and ChatGPT, which is useful for regular end users, but this is also where Microsoft touts the opportunity for developers to take advantage of the new capabilities.

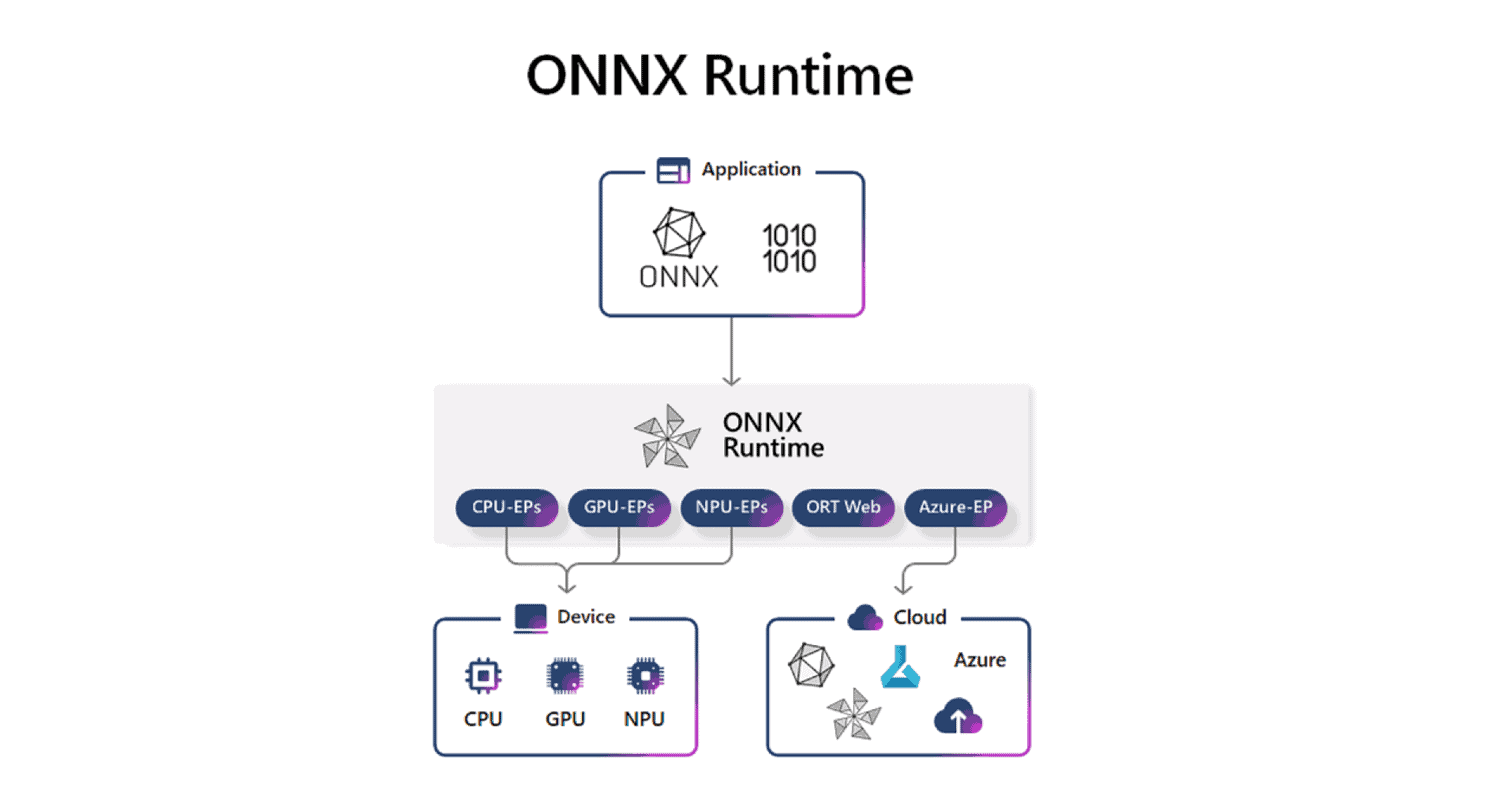

Microsoft wants to democratize AI development, and one way it aims to do so is by making it easier to run AI applications on its own platforms. ONNX Runtime lets developers run AI models both locally and in the cloud. This works dynamically and can also be done in a hybrid form. Azure resources are ready to accelerate AI, but Microsoft also points out that more and more hardware suitable for local AI computation is under development. According to Microsoft, developers need only a few lines of code to have ONNX Runtime handle AI acceleration.

The hardware in question is the NPU, or Neural Processing Unit. NPUs are already part of Qualcomm’s Snapdragon 8cx Gen 3 platform, and other chip giants such as Intel and AMD are busy baking in AI acceleration as well. That hardware is sorely needed if Microsoft’s AI vision is to become reality, since the cloud will not be able to serve the AI applications of all Windows users at the same time.
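The “few lines of code” for local acceleration might look roughly like the sketch below. It uses the real `onnxruntime` Python package (`get_available_providers()` and `InferenceSession` with a `providers` list), but the preference order, the helper names `choose_providers` and `make_session`, and the model path are our own assumptions. It covers only local hardware, not the hybrid cloud path, and some providers (for example the QNN provider for Qualcomm NPUs) may need extra provider options in practice.

```python
# Assumed preference order: NPU first, then GPU, then CPU as a last resort.
PREFERRED_PROVIDERS = (
    "QNNExecutionProvider",   # Qualcomm NPUs; may need extra provider options
    "DmlExecutionProvider",   # DirectML, i.e. GPUs on Windows
    "CUDAExecutionProvider",  # NVIDIA GPUs
    "CPUExecutionProvider",   # always-available fallback
)


def choose_providers(available, preferred=PREFERRED_PROVIDERS):
    """Return the preferred providers that are actually available, best first."""
    chosen = [p for p in preferred if p in available]
    # Always keep a CPU fallback so the provider list is never empty.
    if "CPUExecutionProvider" not in chosen:
        chosen.append("CPUExecutionProvider")
    return chosen


def make_session(model_path):
    """Create an ONNX Runtime session on the best local hardware found."""
    import onnxruntime as ort  # lazy import: choose_providers() works without it

    providers = choose_providers(ort.get_available_providers())
    return ort.InferenceSession(model_path, providers=providers)
```

Calling `make_session("model.onnx")` (a hypothetical file) requires `onnxruntime` to be installed. Keeping the selection logic in a plain function means the fallback behaviour can be checked on any machine, with or without an NPU.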

Spec

Although Copilot is coming to the OS level, Microsoft is not centralizing every aspect of it with this move. The tech giant’s own explanation makes it clear that it is building on an earlier concept: Copilot X. For GitHub users, the “X” suggested an AI assistant capable of anything, yet each Copilot X feature was aimed at one specific application.

There’s good reason for doing it this way: an AI application works much better when it is focused on a single task. Anyone who has tried to use ChatGPT for help with coding will quickly recognize that GitHub Copilot is the better assistant, one that actually provides useful information. In such a specialized setup, the AI chatbot receives a set of instructions before the user starts the conversation, so that it stays on topic. For example, you won’t get information about snakes if you ask a question about Python. At least, that’s the idea.
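The pre-conversation instructions described above can be sketched as a chat request that always opens with a fixed “system” message, using the role/content message format of OpenAI’s Chat Completions API. The prompt text and the `build_messages` helper are hypothetical, and the sketch only assembles the request without calling any model. A system prompt steers the model but does not guarantee it stays on topic, hence “at least, that’s the idea.”

```python
# Hypothetical instructions for a coding copilot; the wording is illustrative only.
SYSTEM_PROMPT = (
    "You are a programming assistant. Only answer questions about writing, "
    "reading or debugging code. 'Python' always refers to the programming language."
)


def build_messages(history, user_input, system_prompt=SYSTEM_PROMPT):
    """Assemble one chat request: fixed instructions first, then the conversation."""
    # The system message is prepended on every request, so the user never skips it.
    messages = [{"role": "system", "content": system_prompt}]
    messages.extend(history)  # earlier user/assistant turns, oldest first
    messages.append({"role": "user", "content": user_input})
    return messages


if __name__ == "__main__":
    request = build_messages([], "How do I reverse a list in Python?")
    for message in request:
        print(f"{message['role']}: {message['content']}")
```

Because the instructions live in the request rather than in the model itself, the same underlying model can become a different copilot simply by swapping the system prompt.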

Because focused assistants work better, new Copilot applications keep appearing out of Microsoft’s top hat, and we can expect Redmond to keep pulling out more. It is now also introducing Copilot in Power BI, Power Pages and the newly launched data platform Microsoft Fabric. Alongside this, it is releasing plugins that should make Copilot more useful with external software. That way, AI assistance should appear not only at the OS level and in Microsoft apps, but also in third-party software.

AI plugins and the Copilot ecosystem

Microsoft clearly wants to head off an incompatibility problem. Despite its comprehensive alliance with OpenAI, it came out with its own plugins for Bing, separate from the ChatGPT equivalent. That was awkward, since both Bing Chat and ChatGPT run on OpenAI models and therefore have much in common. Microsoft has now announced that it will use the same plugin standard as OpenAI.

These plugins are potentially very useful. Microsoft would like people to see them as a bridge between large AI models and a company’s private data. It makes sense that the two should not be connected directly. Consider the leak of sensitive data by Samsung employees who pasted such data into ChatGPT. Microsoft clearly intends to prevent a repeat on its watch: plugins should ensure that the AI model accesses relevant data only when it receives a specific query about it, and the chatbot then retrieves that data in real time. Microsoft CTO Kevin Scott says that a plugin is about how you, the copilot developer, give your copilot or AI system capabilities it does not currently have. It’s about connecting with your data and the systems you build. “I think eventually there will be an incredibly rich ecosystem of plugins,” he stated.
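A plugin in the OpenAI style pairs a manifest (OpenAI’s `ai-plugin.json`, which points to an OpenAPI description of the plugin’s API) with ordinary web endpoints. The sketch below shows the on-demand idea with a hypothetical order-lookup plugin: the model never sees the whole dataset, only the one record its query asks for. The manifest field names follow OpenAI’s published format as we understand it; the plugin name, placeholder URLs, data and the `get_order_status` handler are all made up, and the handler is a plain function rather than a real web server.

```python
import json

# Hypothetical private company data; it is never pasted into the chat window.
ORDERS = {
    "A-1001": {"status": "shipped", "carrier": "DHL"},
    "A-1002": {"status": "processing", "carrier": None},
}

# Manifest in the style of OpenAI's ai-plugin.json; all values are placeholders.
MANIFEST = {
    "schema_version": "v1",
    "name_for_human": "Order Lookup",
    "name_for_model": "order_lookup",
    "description_for_human": "Check the status of your orders.",
    "description_for_model": "Look up the status of a single order by its ID.",
    "auth": {"type": "none"},
    "api": {"type": "openapi", "url": "https://example.com/openapi.yaml"},
    "logo_url": "https://example.com/logo.png",
    "contact_email": "support@example.com",
    "legal_info_url": "https://example.com/legal",
}


def get_order_status(order_id):
    """Endpoint handler: return only the single record the model asked for."""
    order = ORDERS.get(order_id)
    if order is None:
        return {"error": f"unknown order {order_id}"}
    return {"order_id": order_id, **order}


if __name__ == "__main__":
    print(json.dumps(MANIFEST, indent=2))
    print(get_order_status("A-1001"))
```

The design choice is the point Microsoft is making: access control and data selection stay in the developer’s own code, and the model only receives what a specific query returns.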

Microsoft also wants to allow external plugins into its own software suite. It is providing a number of options within Visual Studio Code, GitHub Copilot and GitHub Codespaces to build, test and deploy new plugins, all of which can run on Azure AI hardware in the cloud. Microsoft promises that this framework will let plugins be deployed broadly across its suite.

Everyone a copilot?

As comprehensive as Microsoft’s suite is, for many use cases it will be important to deploy a copilot independently of these services. Scott sees enormous potential in new copilot experiences and compares the shift to the advent of smartphones, an invention that undeniably changed the world.

This brings us to what a “copilot” actually is, at least as Microsoft sees it. So far, a copilot has been an application of generative AI defined by the field it serves. GitHub Copilot, for example, is a chatbot focused on programming code, just as an Office Copilot offers help with Word, Excel or PowerPoint. In other words, a “copilot” is a chatbot with a job. This also shows why we should not expect ChatGPT to suddenly perform every task once it runs on GPT-5, GPT-6 or GPT-19. Developers, whether they work for Microsoft or not, will always be needed to adapt the AI into a copilot.
