AWS now offers Meta’s recently released Llama 2 models through Amazon SageMaker JumpStart, which should make it easier for users to build and deploy ML solutions based on them.

Meta recently released its Llama 2 large language models publicly, in partnership with Microsoft. These are generative text models with 7, 13 and 70 billion parameters, offered both as pretrained base models and as fine-tuned Llama 2 chat variants for dialog use. The models were pretrained on 2 trillion tokens of data from public sources.

Llama 2 and Amazon SageMaker JumpStart

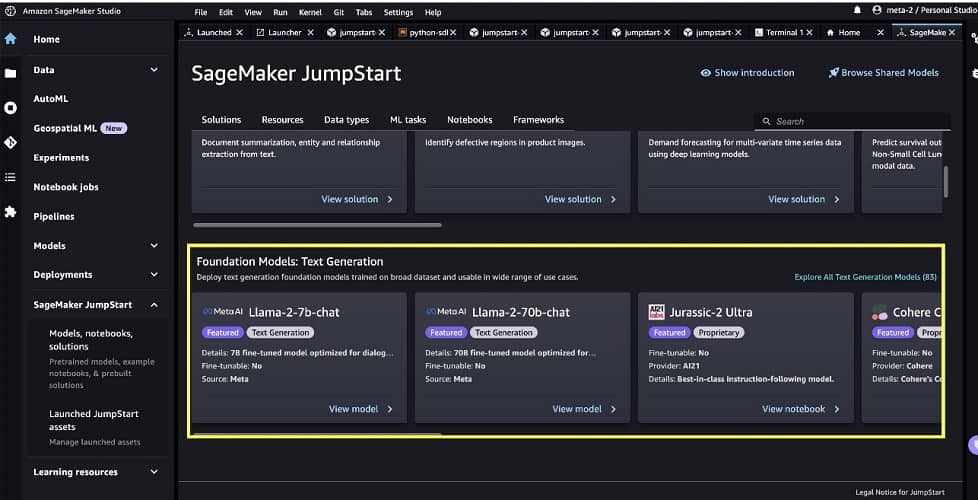

By making the models available in Amazon SageMaker JumpStart, AWS aims to make Llama 2 easier for end users to work with. JumpStart is an ML hub that provides access to algorithms, pretrained models and other ready-made ML solutions, so that users can get started with ML faster.

Among other things, SageMaker JumpStart lets users choose from a range of foundation models, which now include Llama 2, and deploy them to dedicated Amazon SageMaker instances.

In different AWS regions

Those interested in the Llama 2 models can now discover and deploy them in a few clicks in Amazon SageMaker Studio, or programmatically via the SageMaker Python SDK. Beyond running the models, users can combine them with SageMaker functionality such as Amazon SageMaker Pipelines, Amazon SageMaker Debugger and container logs.
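For readers who prefer the SDK route, the following is a minimal sketch of what a deployment might look like. It makes several assumptions that are not stated in this article: the model ID `meta-textgeneration-llama-2-7b-f` (the 7B chat variant as named in AWS's launch examples), the dialog-style request format, and the `accept_eula=true` custom attribute used to accept Meta's license. These details may differ between SDK versions, so check the current SageMaker documentation before relying on them.

```python
# Assumption: JumpStart model ID for the 7B chat variant, taken from AWS's
# launch examples; other sizes and variants use different IDs.
MODEL_ID = "meta-textgeneration-llama-2-7b-f"


def build_chat_payload(user_message, max_new_tokens=256, temperature=0.6, top_p=0.9):
    """Build a request body in the dialog format assumed for the chat variants."""
    return {
        "inputs": [[{"role": "user", "content": user_message}]],
        "parameters": {
            "max_new_tokens": max_new_tokens,
            "temperature": temperature,
            "top_p": top_p,
        },
    }


def deploy_and_query(user_message, model_id=MODEL_ID):
    """Deploy the model to an endpoint, send one prompt, then delete the endpoint.

    Requires AWS credentials, a SageMaker execution role and quota for the
    model's default instance type. Running this incurs cost.
    """
    # Imported here so the payload helper works without the SDK installed.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(model_id=model_id)
    predictor = model.deploy()
    try:
        # Assumption: the endpoint expects explicit acceptance of Meta's license.
        return predictor.predict(
            build_chat_payload(user_message),
            custom_attributes="accept_eula=true",
        )
    finally:
        # Always remove the endpoint so it does not keep billing.
        predictor.delete_endpoint()
```

Calling `deploy_and_query("What is Amazon SageMaker JumpStart?")` would provision an endpoint, return the model's response and tear the endpoint down again. In practice the endpoint would usually be kept alive across many requests rather than created per call.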

The Llama 2 models are now available in Amazon SageMaker Studio in the AWS regions US East (N. Virginia, us-east-1), US West (Oregon, us-west-2), Europe (Ireland, eu-west-1) and Asia Pacific (Singapore, ap-southeast-1).
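A script that deploys the models could guard against unsupported regions with a small check like the sketch below. The region codes are inferred from the region names given in this article, the helper name `is_llama2_region` is hypothetical, and the list reflects availability at the time of the announcement only.

```python
# Region codes inferred from the four regions named in the article.
SUPPORTED_REGIONS = {"us-east-1", "us-west-2", "eu-west-1", "ap-southeast-1"}


def is_llama2_region(region_name):
    """Return True if the region was among those listed at launch."""
    return region_name.strip().lower() in SUPPORTED_REGIONS
```

For example, `is_llama2_region("eu-west-1")` returns `True`, while `is_llama2_region("eu-central-1")` returns `False`.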