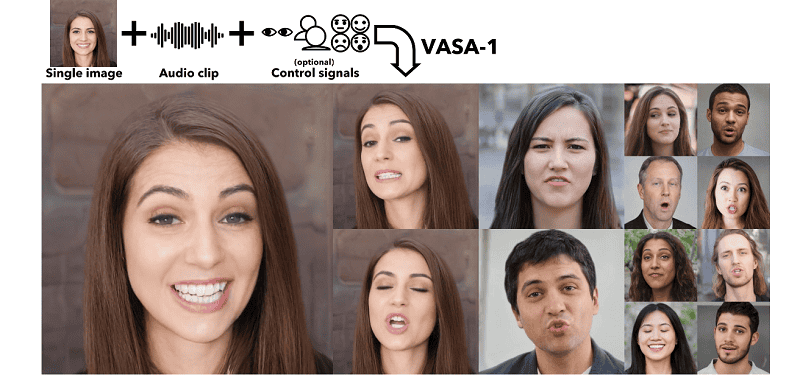

Microsoft recently published a study presenting the AI model VASA-1. The model uses a portrait photo and an associated audio file to generate realistic “talking heads”. The technology offers creative options, but it also carries serious risks.

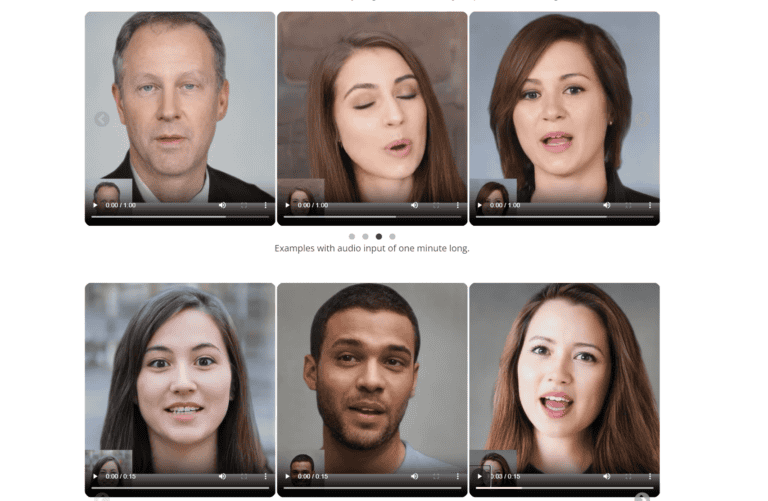

The VASA-1 AI model is still in the research phase. Even so, Microsoft has already shown that it can make portrait photos of people talk ‘realistically’ when combined with audio files. The facial expressions shown are context-sensitive, adapting to the detected tone of the audio.

Very realistic

The people in the portrait photos do not have to look directly into the camera. In addition, the AI model has many features, such as determining eye gaze, head distance and even emotional expression.

This gives the processed images a very realistic “look and feel” when they appear to be talking, Microsoft states. The technology enables these “talking pictures” to sing songs, among other things.

According to Microsoft, VASA-1 was designed specifically for animating virtual characters. The images the tech giant released with the study are said to be virtual examples created with OpenAI’s DALL-E.

Use cases and serious risks

The new technology offers many possible uses. It can be used to develop more realistic AI characters, complete with natural lip-syncing and facial expressions that add depth. It also makes it possible to create avatars for social media videos. As a striking example of how varied the applications are, Microsoft itself had the Mona Lisa sing.

Yet there are also risks associated with this new AI technology. If it were publicly available, it could lead directly to much more convincing deepfakes. This potential for malicious use is the reason Microsoft is keeping the specific details of VASA-1 to itself for now. The researchers warn that although the technology was developed with good intentions, especially for the creative sector, the danger of misuse is very real.
