AI search engines frequently give incorrect answers about news sources. More than 60 percent of test queries produced incorrect answers, according to research published by the Columbia Journalism Review.

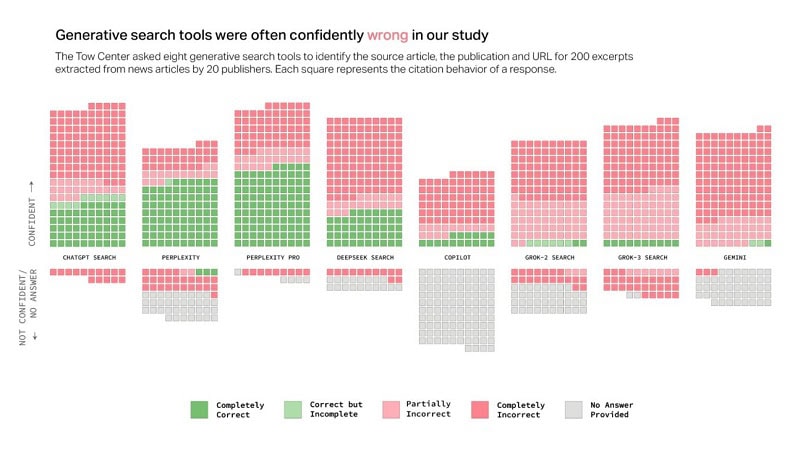

According to researchers at the Tow Center for Digital Journalism, AI search engines have serious problems correctly representing news sources. An analysis of eight AI search engines with “live search” functionality found that the underlying language models gave incorrect information in more than 60 percent of cases.

Large differences between AI search engines

The researchers tested the AI models by entering excerpts from real news articles. They then asked the AI search engines to identify the article’s headline, original publisher, publication date and URL. A total of 1,600 search queries were performed across eight generative search engines.

The error rates varied widely by platform. Grok 3 from xAI scored the worst with an error rate of 94 percent. ChatGPT gave an incorrect answer to 134 of its 200 queries, or 67 percent. Perplexity AI performed markedly better with a 37 percent error rate.
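The percentages above follow from simple arithmetic. The short sketch below reproduces the ChatGPT figure, assuming the 1,600 queries were split evenly across the eight engines, which matches the 200 queries cited for ChatGPT. The function and variable names are illustrative and do not come from the study.

```python
# Figures taken from the article; the even split per engine is an assumption.
TOTAL_QUERIES = 1_600
ENGINES = 8

queries_per_engine = TOTAL_QUERIES // ENGINES


def error_rate_percent(incorrect: int, total: int) -> float:
    """Return the share of incorrect answers as a percentage."""
    return 100 * incorrect / total


# ChatGPT: 134 incorrect answers out of its share of the queries.
chatgpt_rate = error_rate_percent(134, queries_per_engine)

print(f"{queries_per_engine} queries per engine")
print(f"ChatGPT error rate: {chatgpt_rate:.0f}%")
```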

Interestingly, paid versions of the AI search engines performed even worse. For example, Perplexity Pro and the premium version of Grok 3 gave incorrect answers more often than their free counterparts. Although these versions answered more prompts correctly, they also tended to give a definitive answer when uncertain rather than decline, which led to a higher error rate.

Causes of incorrect answers

The researchers also examined the causes of the high error rates. Rather than declining to answer when they lack information, AI search engines often generate incorrect or speculative answers. This problem was evident across all platforms tested.

In addition, some AI tools ignore the Robots Exclusion Protocol, which publishers use to block unwanted crawler access. For example, the free version of Perplexity AI correctly identified all ten excerpts from National Geographic articles, even though this content is behind a paywall.
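To illustrate how the Robots Exclusion Protocol is meant to work, here is a minimal sketch using Python's standard `urllib.robotparser` module. The robots.txt rules, the crawler name `ExampleAIBot`, and the URL are hypothetical and do not come from any real publisher or from the study.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one AI crawler is blocked from the whole site,
# while every other crawler is allowed.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""


def may_fetch(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if the robots.txt rules permit this crawler to fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical article URL on an example domain.
ARTICLE_URL = "https://publisher.example/news/some-article"

print(may_fetch("ExampleAIBot", ARTICLE_URL))  # blocked crawler
print(may_fetch("OtherBot", ARTICLE_URL))      # falls under the wildcard rule
```

A well-behaved crawler performs a check like this before each request. The protocol is advisory, however: nothing technically prevents a crawler from skipping the check, which is why publishers cannot rely on it alone.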

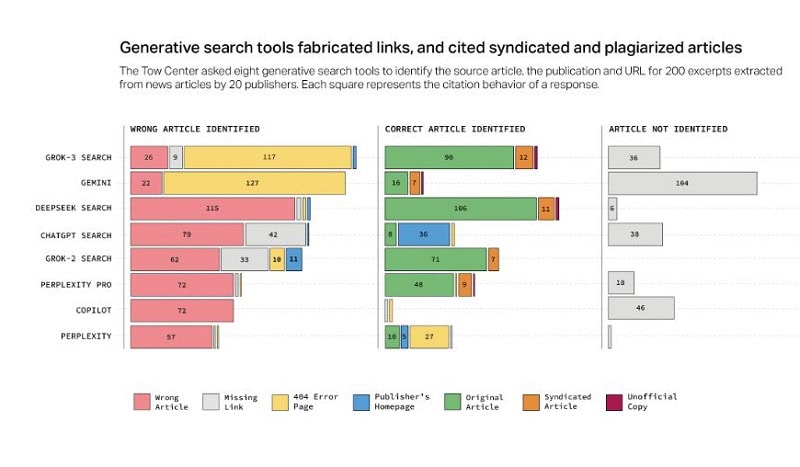

Furthermore, AI search engines often refer to syndicated versions of news articles rather than the original source. This happens, for example, when Yahoo News reposts articles. Even when publishers have agreements with AI developers about content usage, original sources are sometimes skipped.

Another major problem is URL fabrication. More than half of the responses from Google Gemini and Grok 3 directed users to fabricated or broken URLs. As a result, users end up on error pages instead of the source websites, which lose that traffic.

Growing problem

The researchers conclude that these errors put news publishers in a difficult position. If they block AI crawlers, they risk becoming less visible. If they allow them, they risk having their content misrepresented and losing traffic.

For users, high error rates are also problematic, as the reliability of AI search engines is called into question. This is especially worrisome since one in four Americans now use AI tools as an alternative to traditional search engines.
