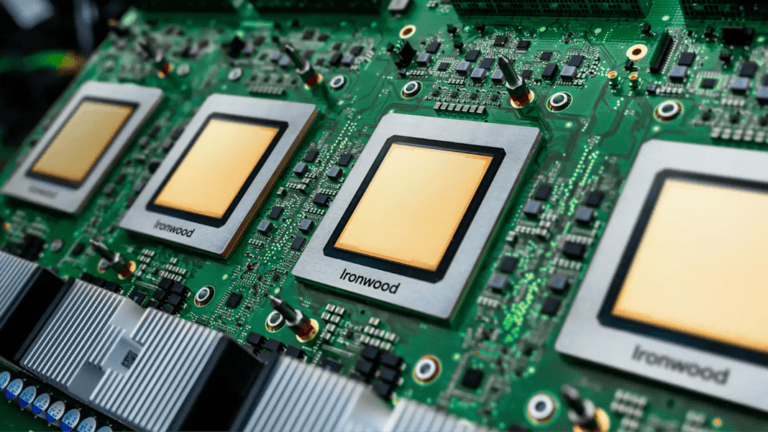

At Google Cloud Next in Las Vegas, Google unveiled its latest Tensor Processing Unit (TPU): Ironwood. Google describes this seventh-generation TPU as the most powerful and most scalable AI chip it has ever developed, and it is designed specifically for inference. According to Google, it marks the beginning of a new era in which AI proactively generates insights instead of just reactively processing information.

Ironwood was built to support the next stage of generative AI, in which artificial intelligence no longer only answers questions from existing data but also collects and interprets data on its own initiative. Google calls this the “age of inference,” in which AI agents independently retrieve and generate data to deliver insights.

This new AI accelerator can scale up to 9,216 chips per pod, connected via what Google calls a breakthrough Inter-Chip Interconnect (ICI) network, in a deployment that draws almost 10 MW of power. This allows Ironwood to deliver very large amounts of computing power for the most demanding AI workloads.

Enormous computing power for complex AI models

Ironwood is designed to handle the complex calculations of “thinking models” such as Large Language Models (LLMs) and Mixture of Experts (MoE) models. For Google Cloud customers, the TPU comes in two configurations: a 256-chip variant and a 9,216-chip variant.

The largest configuration delivers 42.5 exaflops of computing power per pod, which, according to Google, is more than 24 times that of El Capitan, the world’s largest supercomputer, which offers ‘only’ 1.7 exaflops. Each individual chip provides a peak of 4,614 TFLOPs. This is a huge improvement over previous generations of TPUs that Google developed together with Broadcom.
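The pod-level figure follows from the per-chip figure, and a short Python sketch makes the arithmetic easy to check. It uses only the numbers quoted above; the variable and function names are illustrative. The article does not state the numeric precision behind either figure, so this verifies the multiplication and the ratio, not whether the two systems are measured on a like-for-like basis.

```python
# Figures as quoted in the article.
CHIPS_LARGE_POD = 9_216
CHIPS_SMALL_POD = 256
TFLOPS_PER_CHIP = 4_614
EL_CAPITAN_EXAFLOPS = 1.7

TFLOPS_PER_EXAFLOP = 1_000_000  # 1 exaflop = 10^6 teraflops


def pod_exaflops(chips: int, tflops_per_chip: float) -> float:
    """Aggregate peak compute of a pod, assuming perfect linear scaling."""
    return chips * tflops_per_chip / TFLOPS_PER_EXAFLOP


large_pod = pod_exaflops(CHIPS_LARGE_POD, TFLOPS_PER_CHIP)
small_pod = pod_exaflops(CHIPS_SMALL_POD, TFLOPS_PER_CHIP)
ratio = large_pod / EL_CAPITAN_EXAFLOPS

print(f"9,216-chip pod: {large_pod:.1f} exaflops")
print(f"256-chip pod:   {small_pod:.2f} exaflops")
print(f"Ratio to the quoted El Capitan figure: {ratio:.1f}x")
```

The product comes out at roughly 42.5 exaflops for the large pod, and dividing by 1.7 gives a ratio of about 25, consistent with the "more than 24 times" claim.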

Ironwood TPU is more efficient and has more memory

Google has not only improved its computing power but has also focused on energy efficiency. Ironwood is twice as energy-efficient as Trillium, the sixth-generation TPU announced last year. This is important when available energy is one of the limiting factors for AI capabilities.

In addition, Ironwood offers 192 GB of High Bandwidth Memory (HBM) per chip, six times as much as Trillium. HBM bandwidth has also improved significantly and reaches 7.4 TBps per chip, 4.5 times that of its predecessor. Inter-Chip Interconnect (ICI) bandwidth has been increased to 1.2 TBps bidirectional, 1.5 times that of Trillium.
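The ratios quoted above also imply what Trillium offers, and the per-chip memory figure implies a total for a full pod. The sketch below derives both from the article's numbers alone; the results are back-of-the-envelope values, not official Trillium specifications, and the dictionary keys are illustrative names.

```python
# Ironwood per-chip figures and the improvement factors quoted in the article.
# Bandwidth values are in whatever unit the article quotes; dividing by a
# ratio leaves the unit unchanged.
ironwood = {"hbm_capacity_gb": 192, "hbm_bandwidth": 7.4, "ici_bandwidth": 1.2}
factor_vs_trillium = {"hbm_capacity_gb": 6, "hbm_bandwidth": 4.5, "ici_bandwidth": 1.5}

# Implied Trillium value = Ironwood value / improvement factor.
implied_trillium = {
    name: ironwood[name] / factor_vs_trillium[name] for name in ironwood
}

# Total HBM in the largest configuration (decimal units: 1 PB = 10^6 GB).
CHIPS_LARGE_POD = 9_216
pod_hbm_pb = CHIPS_LARGE_POD * ironwood["hbm_capacity_gb"] / 1_000_000

for name, value in implied_trillium.items():
    print(f"Implied Trillium {name}: {value:.2f}")
print(f"HBM across a 9,216-chip pod: {pod_hbm_pb:.2f} PB")
```

Under these assumptions, the implied Trillium figures are 32 GB of HBM per chip, an HBM bandwidth of about 1.64, and an ICI bandwidth of 0.8 in the quoted units, while a full pod holds roughly 1.77 PB of HBM.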

This new AI accelerator builds on previous generations of TPUs that Google Cloud has used to accelerate AI development.

The future of AI chips

According to Google, Ironwood represents a unique breakthrough in the era of inference. It offers increased computing power, memory capacity, ICI network improvements, and reliability. Combined with an almost twofold improvement in energy efficiency, customers can now run training and inference workloads with the highest performance and lowest latency.

Leading AI models like Gemini 2.5 and the Nobel Prize-winning AlphaFold are already running on these TPUs. Google expects Ironwood, which will be available later this year, to enable many more AI breakthroughs, both within Google itself and for Google Cloud customers.